Early Simple (Static) Check of (Implementation) Models

Meta-Information

Origin: Darren Sexton / RICARDO

Written: October 2014

Purpose: To optimize model-based A&T by performing inexpensive (robustness) static checks as integrated part of the modelling, prior to performing more dedicated and expensive functional checks (that may fail because of modelling errors that could have been easily prevented).

Context/Pre-Conditions: nothing special.

To consider: Tools used for modelling should support static analysis.

Structure

Participants and Important Artefacts

Modelling Engineer: Engineer creating behavioral model for analysis, testing, or implementation of system under construction.

Static Analysis: A “push-button” effective analysis technique that may detect modelling errors, like

– Undeclared variables, type errors,

– Unreachable states or transitions,

– Unsatisfiable logics or transition guards,

– Value range violations, Division by zero,

etc.

Ad-hoc Simulation and Test: Simulation, animation, and test performed by the engineer to check that a model edit had the desired effect.

– Important new checks or properties elucidated at this point should be stored and used as part of the “full A&T” for regression purposes.

Full A&T: Verification of requirements and collected properties. This may be time consuming due to exhaustive model-check, or thorough simulation/test.

.

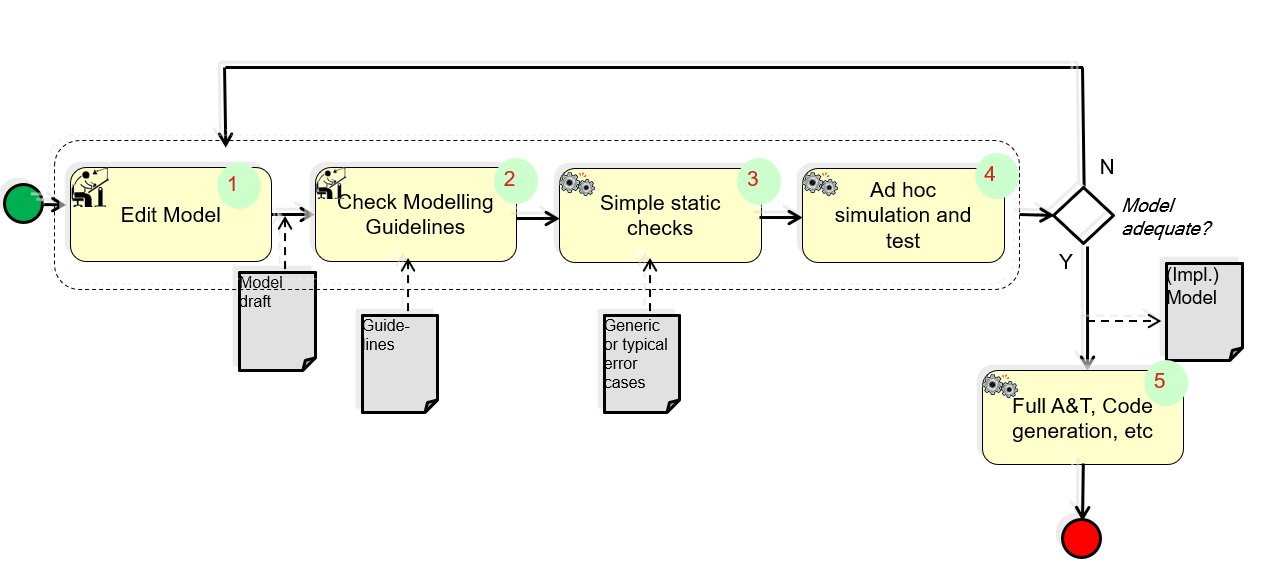

Actions / Collaborations

(1) The models are constructed by the authors; a key part of this method is that the model author uses entities 2, 3 (and also 4, but not so relevant so this method) as they develop the work.

A key point is that model development and early analysis are done iteratively and in parallel.

(2) Check modelling guidelines as the model is developed; this is much more efficient than checking them on a model that has already been developed.

Steps 2 & 3 are, in practice, typically run at the same time.

(3) Simple static checks: Checking for common errors as the model is developed can help engineers avoid repeating systematic mistakes as they further develop the model.

(4) Ad-hoc simulation and test should be performed as the model is developed, but, at each stage, only after the static analysis from steps 2 & 3 have been performed.

This is to avoid wasting time debugging test failures that are due to erorrs that could easily have been caught by static analysis.

(5) When the engineer has thus ensured that the model is adequate, it is subjected to the full, possibly time-consuming, A&T: Verification of requirements and collected properties. This may be time consuming due to exhaustive model-check, static analysis, or thorough simulation/test.

If a mistake is discovered, the process may fully or partly be repeated.

.

Discussion

Benefit: Less time consuming model-based A&T.

Comments (from Darren Sexton, edited by Wolfgang Herzner): Advanced static analysis techniques, such as model-checking or abstract interpretation, can be used to detect certain classes of issues within design / implementation models. Additionally, custom static checks (not based on formal methods) can be defined to detect many additional common modelling errors. Such analysis can often be performed as “push-button” checks, with little set-up overhead. The types of errors that can typically be found with such push-button analysis include:

Unreachable states within a state-chart: This is likely to indicate a requirement error, implementation error, unwanted functionality etc.

Unreachable logic within a state transition or other modelling construct: This is likely to indicate a requirement error, implementation error, unwanted functionality etc. or may be due to a functionally valid modelling construct but that will cause later problems when trying to achieve structural code coverage through testing.

Value range violations: This may indicate a design error or the incorrect selection of ranges.

Division by zero: This may indicate a design error or a lack of defensive modelling.

Common modelling mistakes: Linkage to incorrect variable during code generation augmentation.

Such checks are analogous to the checks often performed on code and many of the same considerations apply. In general, these types of checks can be beneficial if their set-up and usage effort is less than effort savings made through either reduced debugging or reduced scope of later verification activities. The set-up effort is typically low, however the effort to analyse the results can be increased if a large number of false alarms are present; this tends to be more of an issue with checks related to numerical values (e.g. range checks). Therefore it may prove more efficient to check only certain classes of error.

The analysis will perform better with some types of modelled behaviour or modelling style than with others. For example a decomposed, modular, software architecture will keep the models at a complexity amenable to analysis – i.e. it is necessary to “design for verification” [REF section]. Some types of behaviour or modelling constructs may prove incompatible with such analysis. In the case of an incompatible modelling construct there may be an acceptable alternative or approximation that can be used as a work-around; for a fundamental incompatibility it must just be accepted that not all software features can be checked in this manner.

Such analysis can be considered either as a official verification activity or a more unofficial one. In this case we mean that a official activity would require full review of the results, investigation of all false alarms, storage of results etc. allowing a claim to be made that all such errors classes are proved not present in this work-product. A more unofficial usage might treat the analysis as a “symbolic debugger”, used to quickly catch certain errors but not guaranteeing to prove the absence of all. Such an informal process would reduce the burden on checking false alarms at the expense of accepting some errors will be missed. This is acceptable depending on the claim being made from using the activity, the required integrity of the software and what later verification activities are to be performed. For example, if similar checks are already officially planned on the generated code, then any checks at the model level could be treated as a helpful aid only.

.

Application Examples

MBAT Automotive Use Case 5 (Transmission Controller Product Line) / Scenario 02 (Analysis of Implem. Model) and Scenario 03 (Automatic TCG):

Participants / Artefacts:

Modelling engineer

(Tools) Mathworks Simulink / Stateflow, targetLink for code generation

Static Analysis

Check modelling guidelines:

– (Tool) Mathworks ModelAdvisor (activity outside of MBAT)

– Operating on Simulink/Stateflow model

– Note: Existing activity outside the scope of MBAT

Simple static checks (robustness):

– (Tool) BTC-EmbeddedValidator

– Operating on TargetLink model

Simple static checks (common errors):

– (Tool) Mathworks ModelAdvisor (custom checks)

– Operating on Simulink/Stateflow & TargetLink models

Ad-hoc testing

(Tool) In-house test environment & built-in support from Mathworks & dSPACE tools

Operating on Simulink/Stateflow & TargetLink models

Note: Existing activity outside the scope of MBAT

MBAT Rail Use Case 1 (Automatic Train Control) / SC02 (System Validation – Create and check T&A model):

Participants / Artefacts:

Edit Model: MaTeLo Editor

Check Modelling Guidelines: manual

Simple Static checks: MaTeLo Editor & MaTeLo Testor

Ad Hoc Simulation and checks: MaTeLo Testor

Full A&T: MaTeLo

Relations to other Patterns

| Pattern Name | Relation* |

| MBT | This pattern helps to reduce modelling faults in MBT by inexpensive checks |

* “this pattern” denotes the pattern described here, “that pattern” denotes the related pattern