Model-Based Testing (MBT)

Meta-Information

Origin: Wolfgang Herzner (AIT) (extracted “MBT” part from Brian Nielsen's “Patterns-template-examples.pptx”).

Written: November 2014

Purpose: Use models to guide and support testing of a target system (SUT – System Under Test).

Context/Pre-Conditions: The properties to be tested can feasibly be expressed in a formal way. Properties can be functional, e.g. whether the SUT reacts to a certain situation in the required way, or non-functional (extra-functional), e.g. whether reaction times stay within a given limit.

To consider:

– The user needs to be able to formalize the requirements precisely enough to allow their representation with models.

– Selecting a modelling language/notation may be difficult, as many alternatives exist.

– Experts should have sufficient expertise to create models correctly and at the right level of abstraction. They can also help to select the modelling language/notation.

– This pattern concerns classical offline test generation with respect to test objectives/purposes or structural model coverage.


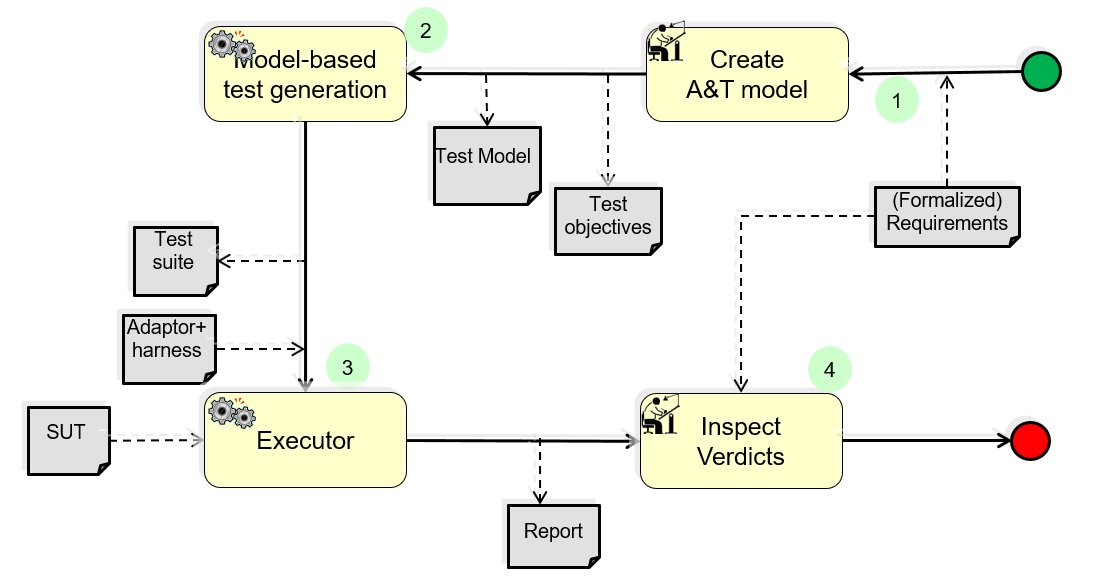

Structure

Participants and Important Artefacts

User: Person who provides the requirements; (s)he may also receive the reports.

A&T Model Engineer: Person who cooperates with the user to analyse the requirements and creates the A&T (test) model that represents them in a formalised way.

Test Objectives denote what shall be tested; they are closely related to the concept of coverage. For MBT, the most important coverage criterion is “each requirement shall be tested at least once”. So, test objectives often represent requirements, but there may be an m:n relationship between requirements and test objectives.

Test Suite: Set of test cases generated from the test model (test case generation, TCG). Each test case contains at least input data, but for assessing whether the resulting behaviour conforms to the requirements, expected results should also be present. If the latter cannot be expressed by explicit values, conditions such as “x > y” are used. Conditions on results are also called oracles.

SUT: The system to be tested.

Test Executor: Unit that applies test cases to the SUT and compares the expected results with the actual ones (i.e. determines the “verdict”). Usually, a so-called “test harness” and “adaptor” are used: a test environment that feeds the input data of a test case to the SUT and records the outputs and behaviour of the SUT, e.g. by mocking services used by the SUT. Often, test experts cooperate with SUT experts in operating the test execution.

Adaptor+harness: Test environment into which the SUT is embedded in order to feed test data (Test Suite) and observe SUT’s reactions and behaviour.
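The relationship between test case, oracle, adaptor and SUT can be illustrated with a minimal Python sketch. All names here (`GeneratedTestCase`, `BrakeAdaptor`, `toy_sut`, the signal names and the numeric values) are hypothetical and chosen for illustration only; they are not taken from any MBAT tool. Each test case carries input data and an oracle, where the oracle is either an explicit expected value or a condition on the observed output.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List


@dataclass
class GeneratedTestCase:
    """One test case: input data plus an oracle on the observed outputs."""
    name: str
    inputs: Dict[str, Any]
    oracle: Callable[[Dict[str, Any]], bool]


def toy_sut(pedal_position: float) -> float:
    """Stand-in SUT (assumption): brake force proportional to pedal position."""
    return 100.0 * pedal_position


class BrakeAdaptor:
    """Hypothetical adaptor+harness: feeds inputs to the SUT, records outputs."""

    def __init__(self, sut: Callable[[float], float]) -> None:
        self.sut = sut

    def run(self, inputs: Dict[str, Any]) -> Dict[str, Any]:
        # Map the abstract input name onto the concrete SUT interface.
        force = self.sut(inputs["pedal_position"])
        return {"brake_force": force}


suite: List[GeneratedTestCase] = [
    # Oracle given as an explicit expected value.
    GeneratedTestCase("released_pedal", {"pedal_position": 0.0},
                      lambda out: out["brake_force"] == 0.0),
    # Oracle given as a condition, in the spirit of "x > y".
    GeneratedTestCase("half_pedal", {"pedal_position": 0.5},
                      lambda out: out["brake_force"] > 40.0),
]


def execute(test_suite: List[GeneratedTestCase],
            adaptor: BrakeAdaptor) -> Dict[str, bool]:
    """Apply every test case through the adaptor and evaluate its oracle."""
    return {tc.name: tc.oracle(adaptor.run(tc.inputs)) for tc in test_suite}


results = execute(suite, BrakeAdaptor(toy_sut))
```

In this sketch the adaptor is the only place that knows the concrete SUT interface, so the test cases themselves stay at the abstraction level of the model.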


Actions / Collaborations

(1) The A&T model engineer cooperates with the user during development of the test model, in order to understand the requirements and model them correctly.

(2) The test engineer uses the test model to generate test cases from it automatically. This may require feedback to the model engineer in the case of problems, e.g. that the requested coverage cannot be achieved, or the model is too complex.

(3) The test engineer cooperates with SUT experts (user) in operating the test execution environment. The test environment usually has to be adapted to the SUT, for which knowledge about the SUT is needed. Sometimes the SUT itself also has to be adapted, e.g. for logging and reporting.

(4) Taking oracles and test results, the verdict expresses whether a test passed (the test results fulfil the oracle), failed (this is not the case), or is considered inconclusive. The latter occurs when the SUT reacts in a way not foreseen by the model, such that the oracle cannot be applied. There may be several reasons for that:

– the model doesn't reflect the specific SUT's behaviour,

– the test case generation doesn't consider the alternative the SUT has taken,

– the report isn't detailed enough.

Therefore, at least for inconclusive test cases, test experts need to interact with other experts to clarify the situation. For failed test cases, cooperation between the involved persons is needed to identify and, where possible, remove the cause.
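The three-valued verdict of step (4) can be sketched as follows. This is only an illustration under stated assumptions: the function names, the `foreseen` predicate (standing in for "the model foresees this reaction") and the 50 ms limit are hypothetical, not part of the pattern.

```python
from enum import Enum
from typing import Any, Callable, List


class Verdict(Enum):
    PASS = "pass"
    FAIL = "fail"
    INCONCLUSIVE = "inconclusive"


def verdict(oracle: Callable[[Any], bool],
            foreseen: Callable[[Any], bool],
            observation: Any) -> Verdict:
    """Return the verdict for one observed SUT reaction."""
    # If the model does not foresee the reaction, the oracle cannot be applied.
    if not foreseen(observation):
        return Verdict.INCONCLUSIVE
    return Verdict.PASS if oracle(observation) else Verdict.FAIL


def reaction_foreseen(observation: Any) -> bool:
    """Assumption: the model only foresees numeric reaction times (in ms)."""
    return isinstance(observation, (int, float))


def within_limit(observation: Any) -> bool:
    """Hypothetical non-functional oracle: reaction time of at most 50 ms."""
    return observation <= 50


# Three observations: in time, too slow, and no measurable reaction at all.
verdicts: List[Verdict] = [
    verdict(within_limit, reaction_foreseen, obs) for obs in (32, 75, None)
]
```

Keeping the "foreseen" check separate from the oracle makes inconclusive results visible as such, rather than letting them appear as failures.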


Discussion

Benefits:

– Expressing requirements by an A&T model significantly helps to analyse requirements, to clarify ambiguities, and to make requirements testable.

– Automatic test case generation with documented coverage is the biggest advantage of this pattern.

– Test cases can easily be re-generated when the requirements or SUT (and thus model) change as opposed to maintaining a lot of handcrafted scripts.
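As an illustration of automatic test case generation with documented coverage, the following Python sketch derives input sequences from a small state machine until every transition is covered. The model, its state and input names, and the simple breadth-first strategy are assumptions made for this example; real test generators use more elaborate algorithms and do not necessarily produce such redundant suites.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Transition = Tuple[str, str]  # (source state, input)

# Hypothetical test model: state -> {input: next state}
MODEL: Dict[str, Dict[str, str]] = {
    "Idle": {"press": "Braking"},
    "Braking": {"release": "Idle", "fault": "Degraded"},
    "Degraded": {"reset": "Idle"},
}
INITIAL = "Idle"


def shortest_path(model: Dict[str, Dict[str, str]], start: str,
                  goal: str) -> Optional[List[Transition]]:
    """Breadth-first search for the shortest transition path start -> goal."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, path = queue.popleft()
        if state == goal:
            return path
        for inp, nxt in model[state].items():
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [(state, inp)]))
    return None  # goal state is unreachable from start


def generate_tests(model: Dict[str, Dict[str, str]],
                   initial: str) -> Tuple[List[List[str]], float]:
    """Generate input sequences aiming at full transition coverage."""
    all_transitions = [(s, i) for s, edges in model.items() for i in edges]
    covered = set()
    tests: List[List[str]] = []
    for transition in all_transitions:
        if transition in covered:
            continue
        prefix = shortest_path(model, initial, transition[0])
        if prefix is None:
            continue  # source state unreachable: transition stays uncovered
        steps = prefix + [transition]
        covered.update(steps)
        tests.append([inp for _, inp in steps])
    coverage = len(covered) / len(all_transitions) if all_transitions else 1.0
    return tests, coverage


tests, coverage = generate_tests(MODEL, INITIAL)
```

When the model changes, re-running `generate_tests` yields a new suite together with its achieved coverage, which is the point made in the benefits above.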

Limitations:

– Developing and maintaining a separate test model alongside the source code, or even alongside system models from which code is generated, is often considered a hardly acceptable effort. This can be alleviated by using the system model as the test model, but then it has to be verified that the latter is correct (see patterns Model-and-Refinement-Checking and MBT-with-Analysis).

– Guaranteed coverage of a large model may be computationally difficult to achieve (large state space) and may require many or long test sequences.

Comments:

– Often, the test suite produced by the model-based test generation (sometimes named abstract test cases) is in a notation not directly usable by the Executor. It then has to be transformed into a format understood by the Executor, usually test scripts (also named concrete test cases).

– It is possible that abstract test cases contain features that are not supported by the Executor. For example, abstract tests may describe parallel tasks or asynchronous inputs, while the Executor is only able to control sequential I/O sequences. Then, either a corresponding conversion needs to be performed during the transformation of abstract to concrete test cases, or the Test Generator is configured not to use such features.

– The capabilities of the Executor constrain what can be expressed in concrete test cases.

– Customers as well as safety standards may require that each test case is justified. For that purpose, the MBT process needs to support tracing from requirements via the test model to the generated test cases.

– It is often required that after a small SUT modification only those test cases are changed which address the modified behaviour (principle of regression testing). TCG then needs to take this into account.
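The last two comments, traceability and regression selection, can both be served by the same trace links. The sketch below assumes hypothetical links from requirements and from test cases to model elements (here: transitions written as "Source->Target"); the identifiers are invented for illustration and do not stem from any of the use cases.

```python
from typing import Dict, List, Set

# Hypothetical trace links: requirement -> model elements that represent it
REQ_TO_MODEL: Dict[str, Set[str]] = {
    "REQ-1": {"Idle->Braking"},
    "REQ-2": {"Braking->Idle"},
    "REQ-3": {"Braking->Degraded", "Degraded->Idle"},
}

# Hypothetical trace links: test case -> model elements it exercises
TEST_TO_MODEL: Dict[str, Set[str]] = {
    "TC-1": {"Idle->Braking", "Braking->Idle"},
    "TC-2": {"Idle->Braking", "Braking->Degraded", "Degraded->Idle"},
}


def justification(test_id: str) -> List[str]:
    """Requirements justifying a test case, traced via the test model."""
    exercised = TEST_TO_MODEL[test_id]
    return sorted(req for req, elements in REQ_TO_MODEL.items()
                  if elements & exercised)


def affected_tests(changed_elements: Set[str]) -> List[str]:
    """Test cases touching changed model elements (regression selection)."""
    return sorted(test for test, elements in TEST_TO_MODEL.items()
                  if elements & changed_elements)


# Which requirements justify TC-1, and which tests need attention
# after the transition Degraded->Idle has been modified?
tc1_requirements = justification("TC-1")
tests_to_regenerate = affected_tests({"Degraded->Idle"})
```

Only the test cases returned by `affected_tests` would need to be regenerated or re-reviewed; all others can be kept unchanged.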


Application Examples

MBAT Automotive Use Case 1 (Brake by Wire): this pattern is used in its scenario 5 (TCG based on Test Model).

MBAT Automotive Use Case 7 (Hybrid Powertrain Control): this pattern is used in its scenario 5 (Model-based test case generation).

MBAT Automotive Use Case 8 (Virtual Prototype Airbag ECU) / Scenarios 1-4:

Participants / Artefacts:

User: Semi-formalized customer requirements, verification engineer

T&A Model Engineer: T&A model MoMuT::REQs, internal format

Test Objectives: (test purpose) Parameter entry in MoMuT::REQs, format text string e.g.: “operating_state=3”

Test Suite: TC in proprietary MoMuT::REQs format

SUT: Matlab Simulink Model

Test Executor: Matlab Simulink Test Adapter in internal format, Report in HTML

Description: The formal requirements obtained through the “writing requirements for formalization” pattern represent the T&A model. With MoMuT::REQs, the test cases for a specified test purpose / test objective can be generated. These abstract test cases are then translated by a test adapter to the abstraction level needed for execution on the model of the system under test. After test case execution, the test result is translated back to the higher abstraction level for interpretation. The abstracted test results are then compared with the expected test results. Deviations and the coverage are reported.

MBAT Aerospace Use Case 5 (Degraded Vision Landing Aid System) with scenarios SC01 (Generate Test Model), SC03 (Automatic TC Generation) and SC04 (Virtual Testing (MiL)):

Participants / Artefacts:

User: Requirements are provided by the customer and captured in IBM Rational DOORS

The T&A Model Engineer develops a MaTeLo T&A test model by analysing the user requirements

Test objective is to achieve full requirements coverage

Test cases are generated automatically from the MaTeLo model with MaTeLo Testor

Test suites are defined with the MicroNova EXAM Toolsuite (incl. Modeler and Testrunner)

SUT is a Rhapsody system model which has been developed by a system engineer based on the user requirements in DOORS

Test executor: the tests are executed on the system model (MiL) making use of MicroNova EXAM Testrunner and Reportmanager.

MBAT Rail Use Case 1 (Automatic Train Control): this pattern is used in its scenario 2 (System Validation – Create and check T&A model):

Participants / Artefacts:

Create T&A Model: MaTeLo Editor

Model-based test generation: MaTeLo Editor

Executor: Mastria 200 Sol (In-house simulator)

Inspect Verdicts: ODEP + TARAM (In-house strip and analysis tool)


Relations to other Patterns

| Pattern Name | Relation* |
|---|---|
| Early Simple Checks of Implementations Models | That pattern helps to reduce modelling faults in MBT by inexpensive checks |
| Target MBA&T by Static Code Analysis | That pattern helps to concentrate TCG on suspected areas |
| Improve re-use of Test Assets by Product Line Eng. | MBT helps in detailing activity 4 of that pattern |
| MBTCG Coverage Completion | That pattern helps to improve coverage of generated test cases |
| Target MBT to failing test case | That pattern helps to generate further TCs around failed test cases |
| MBT with Test Model Analysis | That pattern increases trust in the test model |
| Model and Refinement Checking | Alternatives (both try to show conformance of the implementation with the model) |

* “this pattern” denotes the pattern described here, “that pattern” denotes the related pattern