Model and Refinement Checking

Meta-Information

Origin: Dejan Nickovic / AIT, Brian Nielsen / AAU, Wolfgang Herzenr / AIT

Written: October 2014

Purpose: Verify that an implementation satisfies formal properties through model-checking (Alternatively: Create a valid abstract model from an implementation) and testing .

Context/Pre-Conditions: A set of formalized requirements (correctly) expressed as properties that can be model-checked.

To consider: Requires high level of expertise, both when creating an abstract model, formulating properties and executing model-checkers, and refining the model based on test runs (CEGAR-principle) of counter-examples.

Structure

Participants and Important Artefacts

V&V Engineer: The person responsible for executing this workflow, and in particular creating/refining the models, formulating properties, interpreting analysis and testing results, debugging etc.

Model-checker: Tool that takes a model and a property, and checks whether it can verify the model or find a trace that violates the property. Such a trace is called “counter example”.

MBT: A procedure capable of determining (approximately) whether an implementation „conforms-to“ (e.g. trace inclusion) its specification model through testing.

Implementation: An executable system serving as the system under V&V.

Counter Example: A trace of the model generated by the model-checker exemplifying how the model may violate a given property; also called negative trace.

Specification model: An abstract behavioral model of the implementation, keeping essential properties of the implementation only.

Witness: A trace in a model that verifies a property; also called positive trace.

.

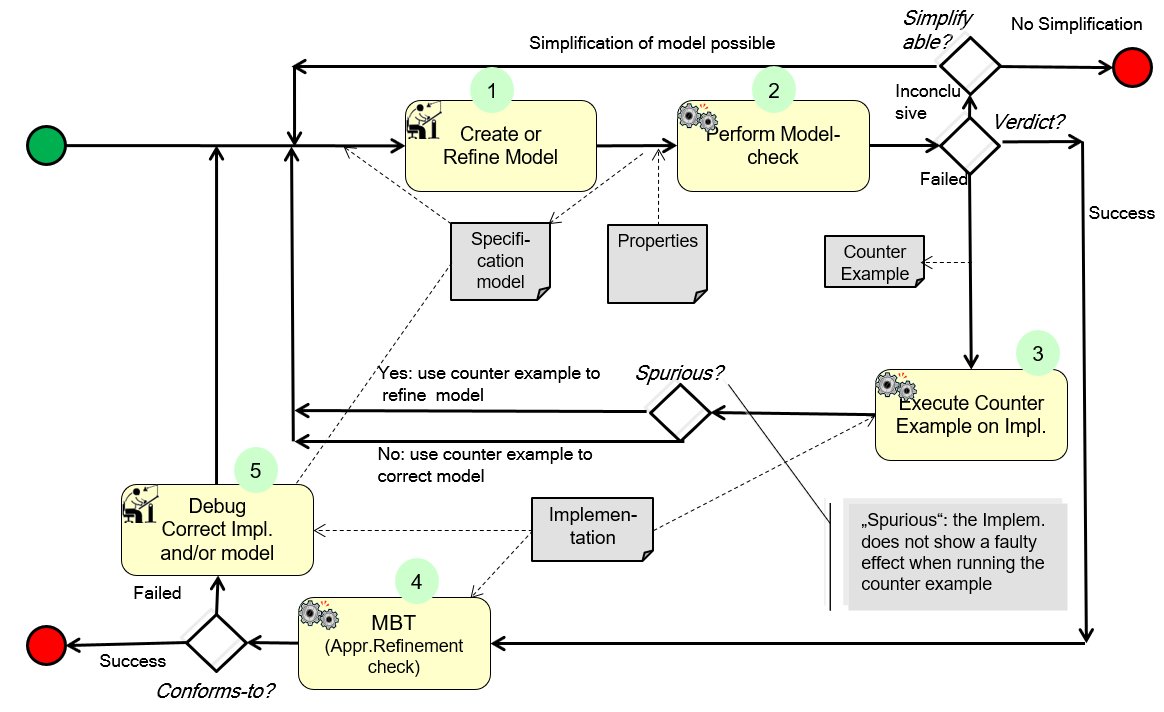

Actions / Collaborations

(1) The engineer creates a first proposal for the model.

(2) He applies model-checking against a set of predefined formal properties, giving a verification status for each property, possibly with a counter example.

– If the result is as expected (Success for all properties), the model is assumed to be a valid/good.

– If the result is inconclusive for some properties, he may attempt to simplify the model (apply abstraction, make state-space smaller, or re-phrase properties).

– If the model has a trace that is not desirable (e.g. generated as counter example to a property that should not be satisfied), the model is wrong, and possibly the implementation.

(3) He creates a test case based on the counter example, and executes it on the SUT, to determine whether the counter example is spurious.

– If the test shows that the trace is not in the implementation, then the model is too abstract, and the engineer needs to refine it.

– Else the model captures the wrong behaviour, and he should correct it – assisted by the counter-example.

(4) He applies MBT to check (by testing approximation) that the behavior of the implementation refines (in formal testing: conforms-to) its specification.

(5) If this is not the case he corrects the implementation (or the model, if the defect can be linked to a modelling error).

.

Discussion

Implications:

– The engineer is getting feedback from the implementation that helps him decide how to correct the model (CEGAR: Counter example guided abstraction refinement).

– It is ensured that the model satisfies its required properties, and that the implementation refines the model (testing approximation), and thereby that the implementation satisfies (testing approximation) the properties.

Comments:

– Feedback from implementation helps the modeller.

– There is some manual work required to determine whether a trace is desired or not, and whether a trace of the implementation is spurious or not.

– The original CEGAR pattern starts with abstracting the model from the implementation, which makes the refinement checking obsolete. However, if model and implementation are developed independently from each other, the refinement checking is needed.

– Find more detailed description in MBAT deliverable D_WP2.1_2_1.

.

Application Examples

MBAT Automotive Use Case 8 (Virtual Prototype Airbag ECU) / Scenarios 01-04:

Participants / Tools:

V&V Engineer: Verification engineer

Model-checker: MoMuT::REQs

MBT: The model consisting of formal requirements is refined because of new or changed customer requirements

Implementation: Model under test in Simulink

Counter Example: The contradicting formal requirements are highlighted

Specification model: The T&A model is updated according to the counter example

.

Relations to other Patterns

| Pattern Name | Relation* |

| Coverage Completion for MBTCG (“MBTCG Coverage Completion”) | This pattern helps to check the refinement relationship between model and implementation |

| Model-based Testing (MBT) | Alternatives. While this pattern generates tests from implementation model, MBT does this from A&T model |

| MBT with Test Model Analysis | Both patterns use same methods |

| Support Safety Analysis with Fault Injection | This pattern eases task of that pattern when verified model is modified |

| Requirements Formalization | That pattern helps to turn requirements into a test model |

* “this pattern” denotes the pattern described here, “that pattern” denotes the related pattern