KPI-Models based Validation

Meta-Information

Origin: Hans-Michael Koegeler / AVL, Andrea Leitner / AVL

Written: April 2019

Purpose: Apply DoE-based behavior models in order to reduce the number of test runs needed to validate the system under test (SUT).

Context/Pre-Conditions:

– V&V management; existing scenario database incl. valid parameter ranges and target KPIs.

– Availability of a working test platform (MiL, HiL, ViL).

To consider: The simulation/test environment must be controllable from an external tool (start and stop the simulation, initialize parameter values, retrieve KPIs, etc.).
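The control requirement above can be sketched as a small interface. This is only an illustration: `ControllableEnvironment`, `DummyEnvironment`, `run_once`, the parameter `speed_diff_kmh` and the KPI `min_distance_m` are hypothetical names, and the KPI value returned by the dummy is made up.

```python
from typing import Dict, Protocol


class ControllableEnvironment(Protocol):
    """Hypothetical control interface that an external tool would rely on."""

    def initialize(self, parameters: Dict[str, float]) -> None: ...
    def start(self) -> None: ...
    def stop(self) -> None: ...
    def retrieve_kpis(self) -> Dict[str, float]: ...


class DummyEnvironment:
    """Stand-in for a MiL/HiL/ViL platform; it does not simulate anything."""

    def __init__(self) -> None:
        self._params: Dict[str, float] = {}
        self._kpis: Dict[str, float] = {}
        self._running = False

    def initialize(self, parameters: Dict[str, float]) -> None:
        self._params = dict(parameters)

    def start(self) -> None:
        self._running = True

    def stop(self) -> None:
        self._running = False
        # ASSUMPTION: made-up KPI formula; a real platform reports measured values.
        self._kpis = {"min_distance_m": 50.0 - 0.5 * self._params.get("speed_diff_kmh", 0.0)}

    def retrieve_kpis(self) -> Dict[str, float]:
        return dict(self._kpis)


def run_once(env: ControllableEnvironment, parameters: Dict[str, float]) -> Dict[str, float]:
    """Drive one complete test run from the outside and return its KPIs."""
    env.initialize(parameters)
    env.start()
    env.stop()
    return env.retrieve_kpis()


print(run_once(DummyEnvironment(), {"speed_diff_kmh": 20.0}))
```

Any platform that offers these four operations, under whatever names, could be wrapped in an adapter of this shape so that a DoE tool can drive it without knowing platform details.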

Used Terms/Concepts: (ENABLE-S3 scenario definition)

Functional Scenario: e.g. “Cut in”

Logical Scenario: e.g. “Cut in from left behind”

Concrete scenario (= concrete Maneuver): e.g. “Cut in from left behind, Starting_conditions defined”

(= optional subcategory for a functional scenario)

Observables:

“Type a”: KPIs to assess performance of the automated functions

“Type b”: Variation Parameter (Starting_conditions) for concrete Maneuvers

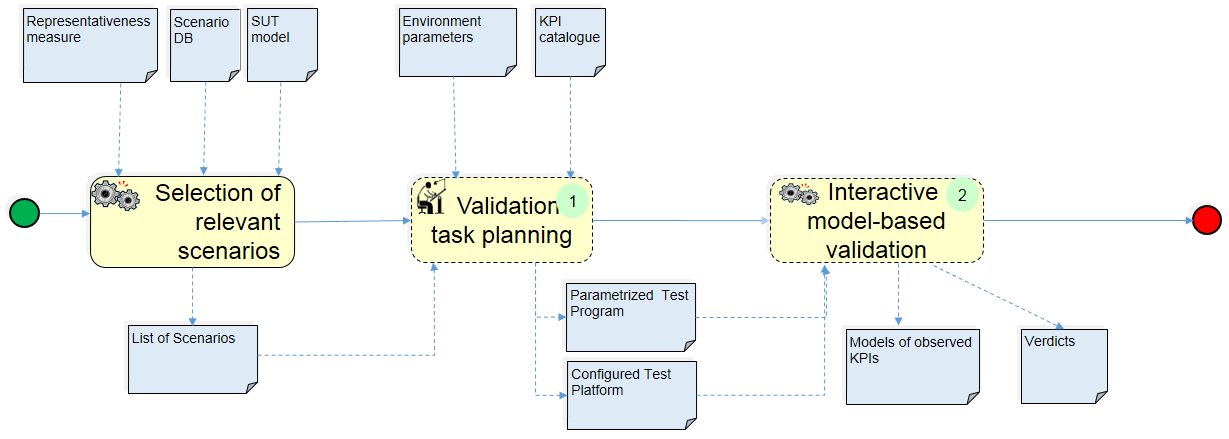

Structure

(1) Validation task planning:

(2) Interactive model-based validation:

Participants and Important Artefacts

V&V Engineer: The person responsible for executing this workflow, in particular for selecting scenarios, relevant parameter ranges and the respective KPIs, for parametrizing the test automation in collaboration with the simulation engineer, and for evaluating the test results.

Simulation/Test Engineer: Responsible for setup and maintenance of simulation/test environment.

System Expert: Person who has detailed knowledge about the SUT.

Interactive DoE: Tool that builds behavioral models for selected KPIs focusing on the areas of interest.

Functional Scenario: general situational setting (with defined value ranges of relevant parameters).

Example: “ego-car on a highway with floating traffic not in the rightmost lane, and a vehicle cutting-in from the lane to its right”.

Key Performance Indicator (KPI): observable parameter/variable, together with requirements that it must not violate.

Example: “distance d of ego-car e to front car f”,

“d always ≥ f(speed_e, speed_f, d, weight_e, friction-coeff_street)”.

KPI (behavior) model: representation of observed KPI values that allows KPI values to be derived for untested combinations of input parameter values.
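The KPI definition above (an observable together with a requirement it must not violate) can be sketched as follows. The names `Kpi`, `required_gap` and `distance_kpi` are hypothetical, and the requirement function is only an illustrative stand-in for f: it uses reaction distance plus the difference in braking distances and ignores the weight and distance arguments named in the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Sample = Dict[str, float]  # observables recorded at one time step


@dataclass
class Kpi:
    name: str
    requirement: Callable[[Sample], bool]  # True if the sample is acceptable

    def violations(self, trace: List[Sample]) -> List[int]:
        """Return the indices of all samples that violate the requirement."""
        return [i for i, sample in enumerate(trace) if not self.requirement(sample)]


def required_gap(sample: Sample, reaction_time_s: float = 1.0, g: float = 9.81) -> float:
    # ASSUMPTION: illustrative threshold, not the function f from the text.
    v_e, v_f, mu = sample["speed_e"], sample["speed_f"], sample["friction"]
    braking_difference = max(0.0, (v_e ** 2 - v_f ** 2) / (2.0 * mu * g))
    return v_e * reaction_time_s + braking_difference


distance_kpi = Kpi("distance_to_front_car", lambda s: s["d"] >= required_gap(s))

# Two made-up samples: speeds in m/s, distance d in m.
trace = [
    {"d": 60.0, "speed_e": 30.0, "speed_f": 30.0, "friction": 0.8},
    {"d": 25.0, "speed_e": 30.0, "speed_f": 30.0, "friction": 0.8},
]
print(distance_kpi.violations(trace))
```

Because the requirement is evaluated per sample, "d always ≥ f(...)" holds for a run exactly when the list of violating indices is empty.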

Actions/Collaborations

The selection of relevant scenarios is the first step and is described in more detail in a separate pattern. See, for instance, Scenario-Representativeness Checking.

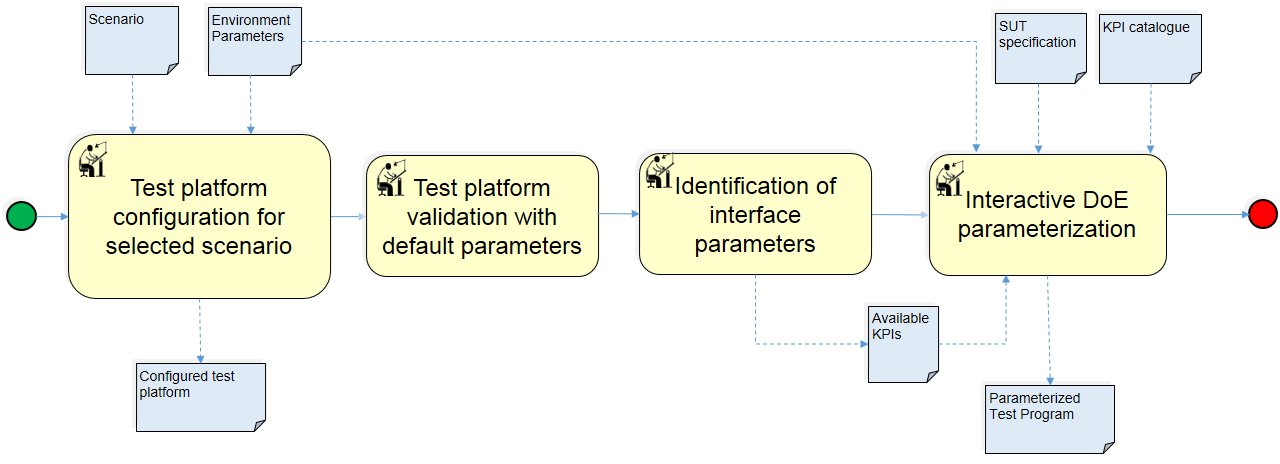

(1) Validation task planning:

(1.a) As a first step, set up and validate the default configuration of the test platform (MiL, HiL, ViL) needed for the selected scenario.

(1.b) Then a list of potential variation parameters needs to be retrieved from the testing/simulation environment (e.g. weather conditions which can be initialized from an external source).

(1.c) Set the possible value ranges for the variation parameters as well as any system limits (e.g. when working with physical components).
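Steps (1.b) and (1.c) could be captured in a small data structure such as the one below. This is a sketch under assumptions: `VariationParameter`, its fields and the example values are hypothetical and not taken from any particular test environment.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class VariationParameter:
    name: str
    low: float                         # requested lower bound for the variation
    high: float                        # requested upper bound for the variation
    limit_low: float = float("-inf")   # system limit, e.g. of a physical component
    limit_high: float = float("inf")

    def effective_range(self) -> Tuple[float, float]:
        """Intersect the requested range with the system limits."""
        low = max(self.low, self.limit_low)
        high = min(self.high, self.limit_high)
        if low > high:
            raise ValueError(f"{self.name}: requested range lies outside the system limits")
        return low, high


# Hypothetical example: the scenario allows 60-160 km/h, the test bed only 130 km/h.
ego_speed = VariationParameter("ego_speed_kmh", 60.0, 160.0, limit_high=130.0)
print(ego_speed.effective_range())
```

Keeping the requested range and the system limits separate makes it visible when a platform restriction, rather than the scenario definition, narrows the tested parameter space.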

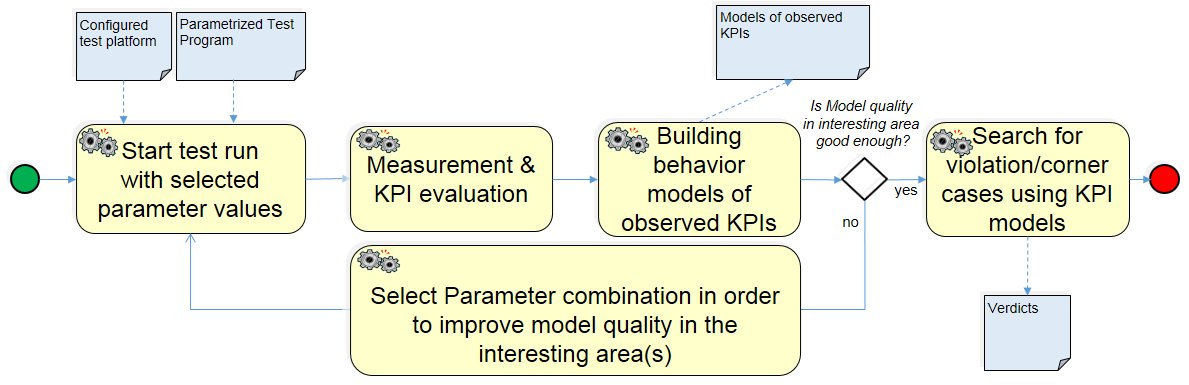

(2) Interactive model-based validation:

(2.a) Start test/simulation run with selected parameters.

(2.b) Measure and evaluate the results (KPIs defined as return value from the test run).

(2.c) Build behavior models for these KPIs in order to assess the influence of the inputs on the outputs.

(2.d) Repeat this process and refine the models in areas of interest.

(2.e) Finally, the behavioral models can be used to search for violation of certain target KPIs.
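Steps (2.a) to (2.e) can be sketched as a loop. The code below is a minimal illustration, not the method of any specific DoE tool: it assumes a quadratic response-surface model fitted by least squares, a 3-level full-factorial initial design, and a placeholder `run_test` with a made-up KPI formula. All parameter names, ranges and the target value are hypothetical.

```python
import itertools

# ASSUMPTIONS: parameter names, ranges, target and KPI formula are made up.
RANGES = {"speed_mps": (20.0, 40.0), "gap_m": (10.0, 60.0)}
TARGET_MIN_DISTANCE_M = 5.0


def decode(point):
    """Map coded values in [-1, 1] to physical parameter values."""
    return {name: lo + (c + 1.0) / 2.0 * (hi - lo)
            for c, (name, (lo, hi)) in zip(point, RANGES.items())}


def run_test(params):
    """(2.a)/(2.b) Placeholder for one test run; returns the measured KPI."""
    return 0.8 * params["gap_m"] - 0.01 * params["speed_mps"] ** 2 + 10.0


def features(point):
    x, y = point
    return [1.0, x, y, x * x, x * y, y * y]  # full quadratic in two inputs


def solve(a, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def fit(points, kpis):
    """(2.c) Least-squares fit of the quadratic model via the normal equations."""
    rows = [features(p) for p in points]
    k = len(rows[0])
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    atb = [sum(r[i] * y for r, y in zip(rows, kpis)) for i in range(k)]
    return solve(ata, atb)


def predict(coeffs, point):
    return sum(c * f for c, f in zip(coeffs, features(point)))


def grid(n):
    levels = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
    return list(itertools.product(levels, levels))


# Initial design: 3-level full factorial in coded units (9 runs).
points = grid(3)
kpis = [run_test(decode(p)) for p in points]
coeffs = fit(points, kpis)

# (2.d) Refine: add runs where the model predicts a KPI close to the target.
candidates = grid(21)
for _ in range(2):
    untested = [p for p in candidates if p not in points]
    closest = sorted(untested,
                     key=lambda p: abs(predict(coeffs, p) - TARGET_MIN_DISTANCE_M))[:3]
    points += closest
    kpis += [run_test(decode(p)) for p in closest]
    coeffs = fit(points, kpis)

# (2.e) Search the model, not the test platform, for predicted violations.
violations = [decode(p) for p in candidates
              if predict(coeffs, p) < TARGET_MIN_DISTANCE_M]
print(len(points), "test runs;", len(violations), "predicted violations")
```

The point of the sketch is the ratio: the model is evaluated on 441 candidate points, while the test platform is only invoked for the 15 design points. With the placeholder KPI, the predicted violations lie at high ego speed and small gap; with a real SUT the model would only approximate the KPI, so predicted violations would need confirmation by actual test runs.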

Discussion

– Essential for this pattern is the DoE combination, within “Interactive Model-based Validation”, of (a) a smart selection of parameter values that keeps the number of test runs as low as possible and (b) the building of KPI behavior models from the test results that represent the real KPI behavior sufficiently correctly.

– There, several methods are possible for selecting test parameter value combinations and, correspondingly, building KPI behavior models. One is outlined in the application example below.

– In order to improve the pattern, a statistical investigation of real driving situations is suggested to determine meaningful parameter value ranges.

– The last step in “Interactive Model-based Validation” is rendered as automatic, because in principle, checking for requirements violation can be automated. However, detailed manual investigation of the results (behavior models) is possible; for instance, to identify critical corner cases.
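As one example of the several possible methods for selecting test parameter value combinations mentioned above, the sketch below generates a Latin hypercube design, a common space-filling alternative to a factorial grid. It is an illustration only; the pattern itself does not prescribe this method, and the function name `latin_hypercube` is hypothetical.

```python
import random
from typing import List


def latin_hypercube(n_runs: int, n_params: int, seed: int = 0) -> List[List[float]]:
    """Return n_runs points in the unit cube with one point per stratum and dimension."""
    rng = random.Random(seed)
    columns = []
    for _ in range(n_params):
        strata = list(range(n_runs))
        rng.shuffle(strata)  # random pairing of strata across dimensions
        # Place each point at a random position inside its stratum.
        columns.append([(s + rng.random()) / n_runs for s in strata])
    return [list(row) for row in zip(*columns)]


design = latin_hypercube(n_runs=8, n_params=2)
for point in design:
    print([round(v, 3) for v in point])
```

Each parameter range is cut into as many equal strata as there are runs, and every stratum is used exactly once per parameter, so even a small number of runs covers each parameter's full range. The unit-cube values still have to be scaled to the physical parameter ranges before a test run.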

Application Example

ENABLE-S3 Use Case 1 “Highway Pilot”: The pattern has been used to validate a selected scenario.


Relations to other Patterns

| Pattern Name | Relation* |
| --- | --- |
| Scenario Representativeness Checking | That pattern yields “Representativeness measure” input for first step in this pattern |
| KPI Catalogue Definition | That pattern provides the KPIs to be used |
| Formalized Analysis and Verification | Both patterns are alternative approaches |

* “this pattern” denotes the pattern described here, “that pattern” denotes the related pattern