SiL (Software in the Loop)

Meta-Information

Origin: Wolfgang Herzner / AIT, David Gonzalez-Arjona / GMV

Written: April 2019

Purpose: Testing the SUT software in a simulated environment to assess its correct operation in a closed-loop setting, including situations that may be too risky or too rare to test in reality.

Context/Pre-Conditions:

– The environment model must capture all relevant details.

– An interface between the SUT software and the environment model is required.

To consider:

– Modelling the environment may be expensive and requires sound expert knowledge. (Of course, the same model was already used for the MiL step.)

– If the SUT software operates in real time but the environment model does not, this gap has to be bridged.

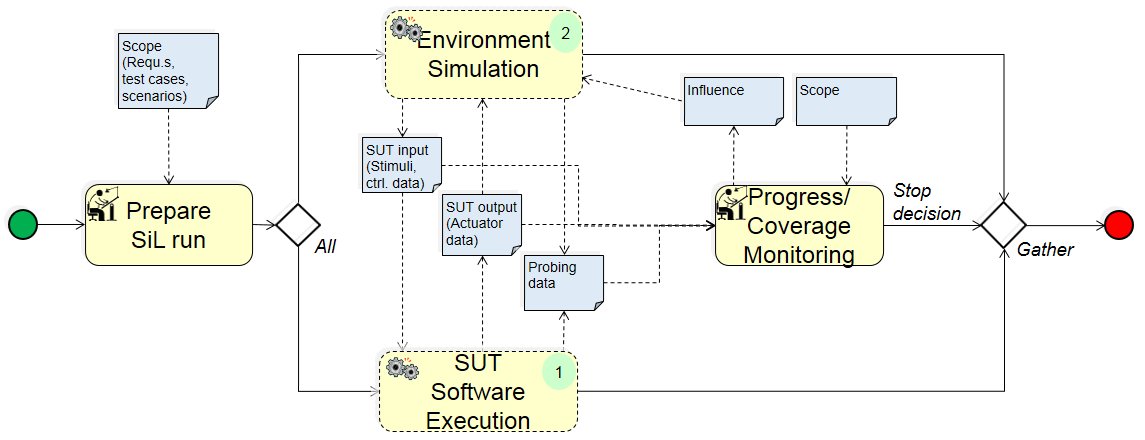

Structure

(1) SUT Software Execution:

(2) Environment Simulation:

Participants and Important Artefacts

Participants:

SUT Software: Software of the target SUT, perhaps not yet in final form, but sufficiently mature that SiL results can be extrapolated to the final system. If the target SUT contains hardware, that hardware can be simulated in software here.

Environment Simulation: executable formal description of the real world that computes the impact of the SUT’s behavior on its environment and derives the resulting new stimuli for the SUT (model). If the SUT is part of a larger system, the environment may include the remaining parts of that system.

Engineer (expert): In charge of initializing the SUT software and the environment model, monitoring progress, and terminating the SiL process.

Artefacts:

Scope: set of requirements, test cases, and/or scenarios that shall be addressed during the SiL process. Note that this may include aspects propagated from the MiL phase.

SUT input:

(a) stimuli representing environmental aspects measured by sensors, e.g. temperature, or “raw” data such as camera images or radar measurements.

(b) control data, e.g. data provided to the SUT by other components of the final system. All input data have to be presented in the format the SUT would receive in the final application.

SUT output (Actuator data): data output by the SUT software, mainly explicit commands to control actuators, e.g. current values for electric motors, but possibly also implicit effects, e.g. pollutant emissions.

Probing data: implicitly generated data characterizing the current states of the SUT and the environment; for example, SUT speed or positions of obstacles. Can include parameters that allow effective monitoring of progress and of adherence to requirements, e.g. KPIs (Key Performance Indicators).

Influence: Means of influencing the ‘evolution’ of the environment in order to cover the scope. For instance, a request to increase the number of obstacles or to change lighting conditions.
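To make the artefacts above more concrete, they could be modelled as plain data types. The following Python sketch is purely illustrative: all class and field names (`SutInput`, `ProbingData`, `lighting_lux`, etc.) are hypothetical and are not part of the pattern or of any ENABLE-S3 tool.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class SutInput:
    """(a) Sensor stimuli and (b) control data, in the SUT's final input format."""
    stimuli: Dict[str, float]  # e.g. {"temperature_c": 21.5}
    control_data: Dict[str, float] = field(default_factory=dict)


@dataclass
class SutOutput:
    """Actuator data computed by the SUT software."""
    actuator_commands: Dict[str, float]  # e.g. {"motor_current_a": 1.2}


@dataclass
class ProbingData:
    """State of SUT and environment, used for progress and requirement monitoring."""
    time_s: float
    sut_speed_mps: float
    obstacle_positions: List[Tuple[float, float]]
    kpis: Dict[str, float] = field(default_factory=dict)


@dataclass
class Influence:
    """Request steering the environment towards uncovered parts of the scope."""
    add_obstacles: int = 0
    lighting_lux: Optional[float] = None  # None: keep the current lighting
```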

Actions/Collaborations

SiL run preparation: Specification of an initial configuration such that the given SiL scope can be covered.
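A SiL run configuration could, for example, record the scope together with the scenarios intended to cover it, so that gaps become visible before the run starts. This is a minimal sketch under that assumption; `SilRunConfig`, its fields, and the mapping from scenarios to scope items are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class SilRunConfig:
    """Hypothetical initial configuration of one SiL run."""
    scope: Set[str]                  # requirement / test-case IDs to cover
    scenarios: Dict[str, Set[str]]   # scenario name -> scope items it addresses
    initial_env_state: Dict[str, float] = field(default_factory=dict)
    time_limit_s: float = 60.0
    random_seed: int = 0

    def uncovered_scope(self) -> Set[str]:
        """Scope items that no configured scenario addresses."""
        addressed = set().union(*self.scenarios.values())
        return self.scope - addressed


cfg = SilRunConfig(
    scope={"R1", "R2", "R3"},
    scenarios={"night_drive": {"R1"}, "heavy_rain": {"R2"}},
)
missing = cfg.uncovered_scope()
```

Here `R3` is reported as not addressed by any scenario, so the configuration would have to be extended before the run is started.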

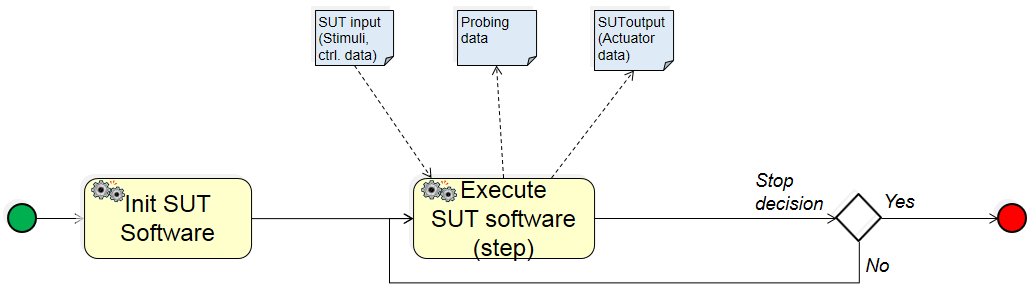

SUT Software Execution:

– Init SUT software: bringing it into the initial state.

– Execute SUT software (step): whenever it receives stimuli, it calculates the resulting output (in particular, actuator control data) and, typically, updates its internal state.
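The two SUT-side actions can be sketched as a wrapper with an `init` and a `step` method. The proportional speed controller inside is only a placeholder for the real SUT software; the class name, the stimuli key `speed`, and the output key `throttle` are assumptions for illustration.

```python
from typing import Dict


class SutSoftware:
    """Hypothetical wrapper around the SUT software under test.

    The proportional speed controller is a placeholder for the real SUT code.
    """

    def __init__(self, gain: float = 0.5, target_speed: float = 10.0) -> None:
        self.gain = gain
        self.target_speed = target_speed
        self.state: Dict[str, float] = {}

    def init(self) -> None:
        # Bring the SUT software into its initial state.
        self.state = {"steps": 0, "last_command": 0.0}

    def step(self, stimuli: Dict[str, float]) -> Dict[str, float]:
        # Compute the output (actuator control data) from the received stimuli...
        error = self.target_speed - stimuli["speed"]
        command = self.gain * error
        # ...and update the internal state.
        self.state["steps"] += 1
        self.state["last_command"] = command
        return {"throttle": command}
```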

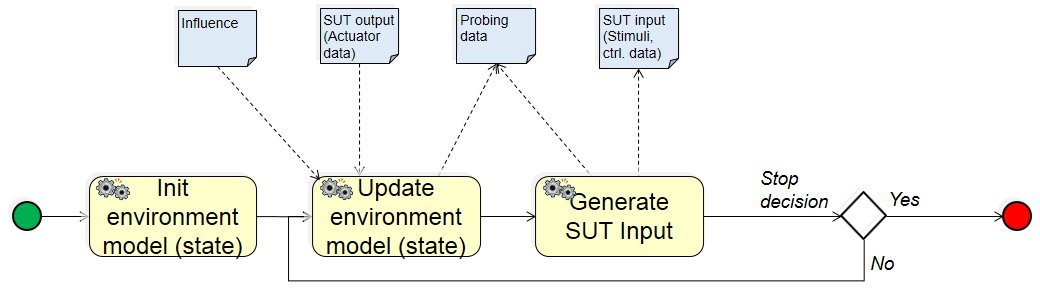

Environment Simulation:

– Init Environment model (state): bringing the model into the initial state.

– Update Environment model (state): whenever it receives the SUT output, it computes the impact of the SUT’s behavior on the environment and calculates the new environment state. Whenever it receives influence, it moves its internal state in the required direction.

– Generate SUT Input: computes and outputs updated stimuli based on the resulting environment state, as well as further SUT control data.
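The three environment-side actions map naturally onto methods of an environment model. The sketch below assumes a hypothetical one-dimensional vehicle with simple drag, integrated by an explicit Euler step; real environment models are far more detailed, and all names here are illustrative.

```python
from typing import Dict


class EnvironmentModel:
    """Hypothetical 1-D vehicle environment with placeholder physics."""

    def __init__(self, dt: float = 0.1, drag: float = 0.1) -> None:
        self.dt = dt
        self.drag = drag
        self.state: Dict[str, float] = {}

    def init(self, speed: float = 0.0) -> None:
        # Bring the model into its initial state.
        self.state = {"time": 0.0, "speed": speed, "obstacles": 0}

    def update(self, sut_output: Dict[str, float]) -> None:
        # Impact of the SUT's behaviour: throttle accelerates, drag decelerates.
        accel = sut_output["throttle"] - self.drag * self.state["speed"]
        self.state["speed"] += accel * self.dt  # explicit Euler step
        self.state["time"] += self.dt

    def apply_influence(self, influence: Dict[str, int]) -> None:
        # Move the environment state in the requested direction.
        self.state["obstacles"] += influence.get("add_obstacles", 0)

    def generate_sut_input(self) -> Dict[str, float]:
        # Derive new stimuli from the resulting environment state.
        return {"speed": self.state["speed"], "obstacles": self.state["obstacles"]}
```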

Progress/Coverage Monitoring: Assessing whether the SiL run stays within scope, e.g. whether requirements are violated, and whether coverage is increasing. If coverage progress is unsatisfactory, Influence can be issued to the environment model.
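A monitor can combine both checks: requirement adherence on the probing data and coverage progress, issuing Influence when coverage stalls. In this sketch, coverage is assumed to be measured as the fraction of visited speed intervals; the speed limit, the stall threshold, and the influence format (`add_obstacles`) are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple


class CoverageMonitor:
    """Hypothetical online monitor for a SiL run."""

    def __init__(self, bins: List[Tuple[float, float]], speed_limit: float,
                 stall_steps: int = 50) -> None:
        self.bins = bins                # speed intervals [low, high) to visit
        self.speed_limit = speed_limit  # example requirement
        self.stall_steps = stall_steps  # steps without progress before nudging
        self.covered: Set[int] = set()
        self.steps_since_progress = 0

    def coverage(self) -> float:
        return len(self.covered) / len(self.bins)

    def check(self, probing: Dict[str, float]) -> Tuple[bool, Optional[Dict[str, int]]]:
        """Return (requirement_ok, influence or None)."""
        if probing["speed"] > self.speed_limit:
            return False, None  # requirement violated
        before = len(self.covered)
        for i, (low, high) in enumerate(self.bins):
            if low <= probing["speed"] < high:
                self.covered.add(i)
        if len(self.covered) > before:
            self.steps_since_progress = 0
        else:
            self.steps_since_progress += 1
        if self.steps_since_progress >= self.stall_steps:
            self.steps_since_progress = 0
            return True, {"add_obstacles": 1}  # nudge the environment model
        return True, None
```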

Discussion

Benefits:

– The target SUT software can be evaluated without producing any physical item or endangering the environment.

– Supports quality monitoring during system construction.

Limitations: Developing the environment model can be very expensive. Moreover, unlike the SUT model, from which the target SUT can sometimes be derived, the environment model does not become part of the final product; it can only be reused for MiL and potentially for other SUTs.

Comments:

Alternating execution: in the outlined workflow, the SUT and the environment model are executed alternately. Overlapping execution cycles are possible, but then timing aspects (see below) must be carefully observed.
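The alternating scheme can be expressed as a simple loop in which the environment and the SUT take turns. This is a minimal sketch assuming both sides are exposed as Python callables; `run_alternating` and the toy usage are hypothetical.

```python
from typing import Callable, Dict, List

Stimuli = Dict[str, float]
Output = Dict[str, float]


def run_alternating(
    sut_step: Callable[[Stimuli], Output],
    env_update: Callable[[Output], None],
    env_generate_input: Callable[[], Stimuli],
    n_steps: int,
) -> List[Output]:
    """Execute environment model and SUT strictly one after the other.

    Each cycle: the environment provides stimuli, the SUT computes its output,
    then the environment integrates that output. Because no steps overlap,
    the result does not depend on the wall-clock duration of either step.
    """
    outputs: List[Output] = []
    for _ in range(n_steps):
        stimuli = env_generate_input()
        output = sut_step(stimuli)
        env_update(output)
        outputs.append(output)
    return outputs


# Toy usage: a one-variable environment and a SUT that halves the deviation.
state = {"x": 8.0}
outs = run_alternating(
    sut_step=lambda s: {"u": -0.5 * s["x"]},
    env_update=lambda o: state.update(x=state["x"] + o["u"]),
    env_generate_input=lambda: {"x": state["x"]},
    n_steps=3,
)
```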

(Im)perfect Stimuli: depending on the purpose of the overall XiL process, simulated sensor inputs can be perfect or defective. Defective inputs are used if the robustness of sensor data processing is to be tested.
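Defective stimuli can be derived from perfect simulated values by injecting faults. The sketch assumes two fault types, additive Gaussian noise and sample dropout (no value delivered); `degrade` and its parameters are illustrative, and a seeded random generator keeps runs reproducible.

```python
import random
from typing import Optional


def degrade(value: float, rng: random.Random, noise_std: float = 0.0,
            dropout_prob: float = 0.0) -> Optional[float]:
    """Turn a perfect simulated sensor value into a (possibly) defective one.

    - dropout: with probability dropout_prob, no value is delivered (None)
    - noise: otherwise, Gaussian noise with standard deviation noise_std is added
    With both parameters at 0.0 the perfect value is returned unchanged.
    """
    if rng.random() < dropout_prob:
        return None
    return value + rng.gauss(0.0, noise_std)


rng = random.Random(42)  # fixed seed: the same defects on every SiL run
noisy_temperature = degrade(21.5, rng, noise_std=0.2, dropout_prob=0.05)
```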

SUT output conversion: if the output of the SUT software, in particular actuator data, is provided in a format inappropriate for the environment model, it has to be converted. (For stimuli, this problem is presumably less critical.)
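As an example of such a conversion, suppose the SUT software emits a raw 12-bit DAC code for the motor current while the environment model expects amperes. The resolution, the 5 A full-scale value, and the key names are assumptions made for illustration only.

```python
from typing import Dict

DAC_BITS = 12        # assumed resolution of the SUT's current command
FULL_SCALE_A = 5.0   # assumed motor current at the maximum DAC code


def dac_code_to_current_a(code: int) -> float:
    """Convert a raw DAC code (SUT output) to a motor current in amperes."""
    max_code = 2 ** DAC_BITS - 1
    if not 0 <= code <= max_code:
        raise ValueError(f"DAC code {code} outside 0..{max_code}")
    return code * FULL_SCALE_A / max_code


def convert_sut_output(raw: Dict[str, int]) -> Dict[str, float]:
    """Map the SUT's actuator data onto the environment model's inputs."""
    return {"motor_current_a": dac_code_to_current_a(raw["motor_dac_code"])}
```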

Timing control: if the environment model does not run in real time but the SUT software does, real-time feedback from the environment (i.e. stimuli updates) has to be simulated.
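One common way to provide simulated real-time feedback is to let the SUT read time from a virtual clock controlled by the co-simulation instead of the operating-system clock. The sketch below assumes the SUT software allows its time source to be replaced this way; `VirtualClock` and `run_with_virtual_time` are hypothetical names, and the technique shown is virtual-time stepping, not synchronisation with wall-clock time.

```python
import time
from typing import Callable, Dict, List, Tuple

Stimuli = Dict[str, float]
Output = Dict[str, float]


class VirtualClock:
    """Simulated time source; the SUT reads time here instead of the OS clock."""

    def __init__(self) -> None:
        self._t = 0.0

    def now(self) -> float:
        return self._t

    def advance(self, dt: float) -> None:
        self._t += dt


def run_with_virtual_time(
    sut_step: Callable[[float, Stimuli], Output],
    env_step: Callable[[Output], Stimuli],
    clock: VirtualClock,
    period_s: float,
    n_cycles: int,
    initial_stimuli: Stimuli,
) -> Tuple[List[Tuple[float, Stimuli]], List[float]]:
    """Make a slow environment model look real-time to the SUT.

    However long env_step takes in wall-clock time, simulated time advances by
    exactly period_s per cycle, so the SUT sees stimuli updates at a fixed
    rate. Wall-clock durations of env_step are recorded for diagnosis.
    """
    stimuli = initial_stimuli
    trace: List[Tuple[float, Stimuli]] = []
    env_durations: List[float] = []
    for _ in range(n_cycles):
        output = sut_step(clock.now(), stimuli)
        start = time.perf_counter()
        stimuli = env_step(output)
        env_durations.append(time.perf_counter() - start)
        clock.advance(period_s)
        trace.append((clock.now(), stimuli))
    return trace, env_durations


clock = VirtualClock()
trace, durations = run_with_virtual_time(
    sut_step=lambda t, s: {"t_seen": t},
    env_step=lambda out: {"echo": out["t_seen"]},
    clock=clock,
    period_s=0.25,
    n_cycles=3,
    initial_stimuli={},
)
```

The recorded durations indicate how far the model is from real time, which helps decide whether a faster model or a different timing strategy is needed.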

(Co-)Simulation Framework (not shown for simplicity): is needed for timing control and data conversion.

Monitoring vs. logging: Instead of the online monitoring shown in the diagram, probing data can be logged and analyzed after the SiL run.
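For offline analysis, probing data could be written to a CSV log during the run and evaluated afterwards. The column names and the 2.0 m minimum obstacle distance used as a requirement are hypothetical; the example uses only Python's standard `csv` module.

```python
import csv
import io
from typing import Dict, List

FIELDS = ["time_s", "speed_mps", "min_obstacle_distance_m"]


def write_log(samples: List[Dict[str, float]]) -> str:
    """Serialise probing samples to CSV text (a file could be used instead)."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    for sample in samples:
        writer.writerow(sample)
    return buf.getvalue()


def analyze_log(text: str, min_distance_m: float) -> Dict[str, object]:
    """Evaluate a logged SiL run after it has finished."""
    rows = list(csv.DictReader(io.StringIO(text)))
    violations = [
        float(r["time_s"])
        for r in rows
        if float(r["min_obstacle_distance_m"]) < min_distance_m
    ]
    return {
        "samples": len(rows),
        "max_speed_mps": max(float(r["speed_mps"]) for r in rows),
        "violations_at_s": violations,
    }


log_text = write_log([
    {"time_s": 0.0, "speed_mps": 5.0, "min_obstacle_distance_m": 10.0},
    {"time_s": 0.5, "speed_mps": 7.5, "min_obstacle_distance_m": 1.5},
    {"time_s": 1.0, "speed_mps": 6.0, "min_obstacle_distance_m": 3.0},
])
report = analyze_log(log_text, min_distance_m=2.0)
```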

SiL (run) termination: A SiL run can be terminated in different ways: (a) after a certain time of SUT execution, (b) when some rule or requirement is violated, (c) when it turns out that the scope cannot be covered completely, or (d) when the whole scope has been successfully covered.

With (c) and (d), the whole process proceeds to the next XiL stage (propagating any aspects left uncovered under (c)); with (b), the whole process is stopped; and with (a), the next SiL run is prepared if the intended scope is not yet completely covered.
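The follow-up rules for the four termination cases can be summarised as a small decision function. This sketch encodes the text above; the enum and function names are hypothetical, and the handling of case (a) when nothing remains uncovered is an assumption, since the text does not state it.

```python
from enum import Enum
from typing import Set, Tuple


class Termination(Enum):
    TIME_LIMIT = "a"           # (a) certain time of SUT execution elapsed
    VIOLATION = "b"            # (b) rule or requirement violated
    SCOPE_NOT_COVERABLE = "c"  # (c) the scope cannot be covered completely
    SCOPE_COVERED = "d"        # (d) the whole scope has been covered


def next_step(reason: Termination, uncovered: Set[str]) -> Tuple[str, Set[str]]:
    """Return the follow-up action and the scope items carried forward."""
    if reason is Termination.VIOLATION:
        return "stop process", set()
    if reason is Termination.SCOPE_NOT_COVERABLE:
        # Propagate the uncovered aspects to the next XiL stage.
        return "proceed to next XiL stage", set(uncovered)
    if reason is Termination.SCOPE_COVERED:
        return "proceed to next XiL stage", set()
    # (a) Time limit reached: prepare another SiL run for the remaining scope.
    if uncovered:
        return "prepare next SiL run", set(uncovered)
    # Assumption: with nothing left uncovered, (a) is handled like (d).
    return "proceed to next XiL stage", set()
```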

If timing issues cannot be solved satisfactorily, an alternative is to skip SiL and go directly to HiL.

Application Example

ENABLE-S3 UC8 “Reconfigurable Video Processors for Space”:

– Image Processing Module


Relations to other Patterns

| Pattern Name | Relation* |
|---|---|
| Closed-Loop Testing | Description of the overall XiL process. Super-pattern of this pattern |
| MiL (Model in the Loop) | Previous pattern in the XiL process |
| HiL (Hardware in the Loop) | Next pattern in the XiL process |
| Test Plan Specification | Supports allocation of requirements to the SiL level |
| Scenario-based V&V Process | Supports allocation of test scenarios / cases to the SiL level |

* “this pattern” denotes the pattern described here, “that pattern” denotes the related pattern