MBT with Test Model Analysis

Meta-Information

Origin: Brian Nielsen / Aalborg University, completed by Wolfgang Herzner / AIT

Written: July 2014

Purpose: Increase confidence in generated tests by analysing (model-checking) the model from which tests are generated (valid tests that satisfy requirements, failing tests likely caused by wrong implem.).

Context/Pre-Conditions: Model-based test case generation (MBTCG) is used as described by pattern ‘MBT’.

To consider:

– It depends on chosen modelling language/notation how easy and efficiently this pattern can be applied.

– Test (modelling) experts need some expertise in model checking.

.

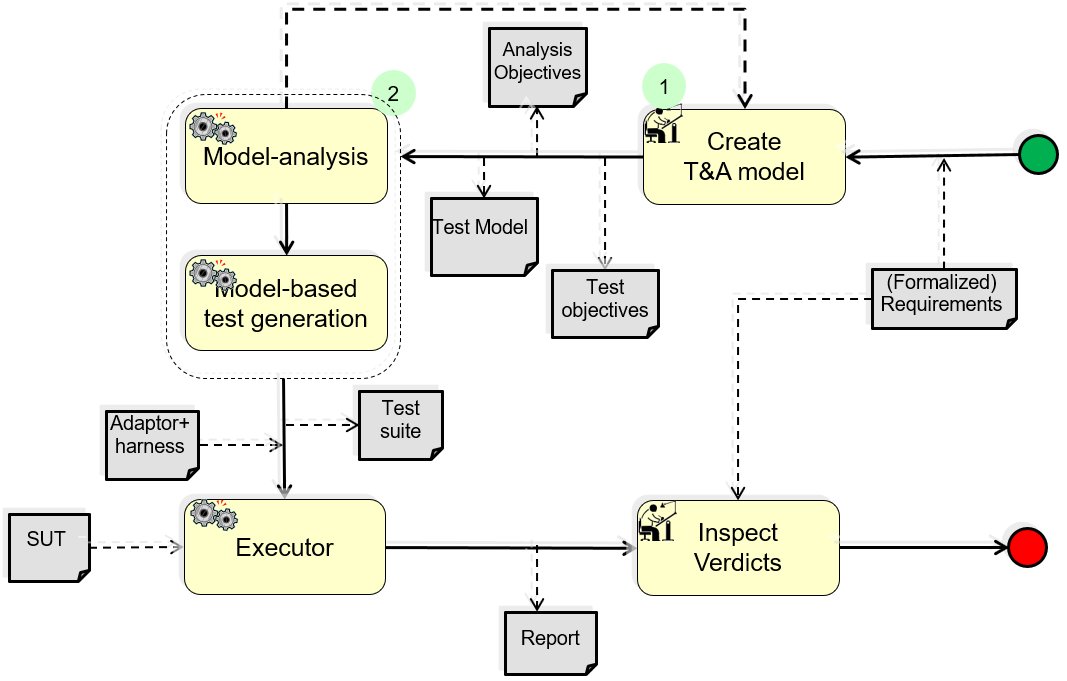

Structure

Participants and Important Artefacts

Only those are described here that operate in addition to those described in pattern ‘MBT’.

Analysis objectives: Properties that have to be possessed by the test model. They are derived from requirements by the T&A model engineer, and should be formulated such that they can be proven by model checking. Additional properties are generic checks like alle edges/locations reachable, abscense of deadlocks.

Model analyser: Process that examines whether the test models meets the analysis objectives. A typical technique used here is model checking.

.

Actions / Collaborations

Only those are described here that operate in addition to those described in pattern ‘MBT’.

(1) The T&A model engineer defines the analysis objectives from the requirements, perhaps in cooperation with the customer.

(2) The model-analyser is usually a model checking process (controlled by a human expert) that examines the model whether it meets an objective or it find a counter example (path through the model) that violates the objective.

.

Discussion

Benefits: Successfull checking of analysis objectives increases trust in correction of subsequently generated test cases significantly. In particular:

– if a test case fails it can (most likely) be assumed that the implementation is wrong,

– if a test case passes confidence is high that the implementation actually conforms to the requirements.

Limitations:

– Model checking is expensive with respect to memory and CPU time, and may fail for more complex test models.

Comments:

– Of course, if model checking shows that the model violates an objective, it should be corrected. This is indicated by the dashed path in the structure diagram.

– If model checking fails for a certain analysis objective, AT-pattern “Use-test run to slice model” could help.

.

Application Examples

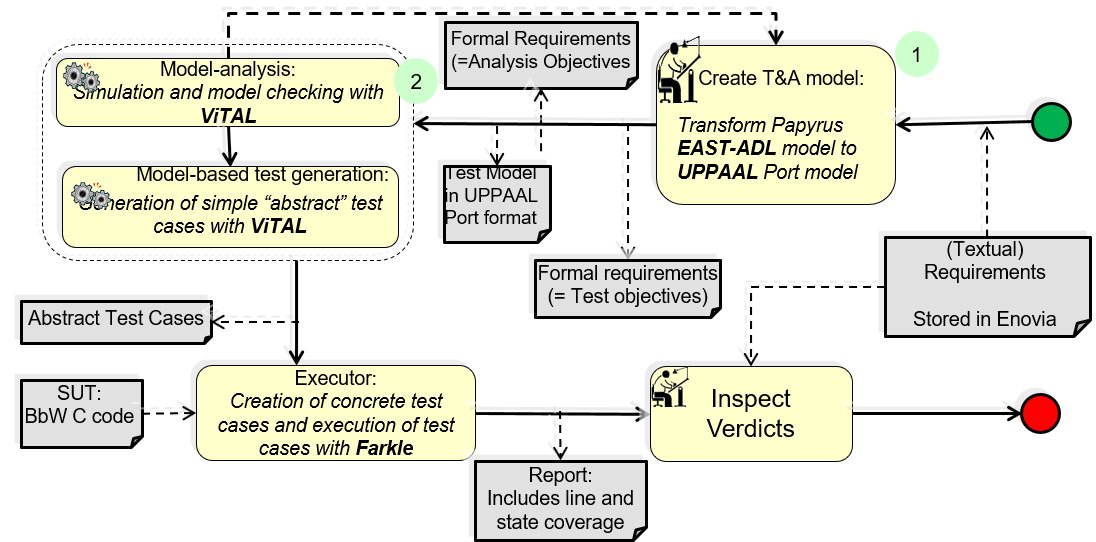

MBAT Automotive Use Case 1 (Brake by Wire) / Scenarios 2 (Analysis on the Analysis Model) and 5 (TCG based on Test Model)

Participants / Tools:

User: VOLVO

Tools: ViTAL (MDH); Farkle (Enea/XDIN/ALTEN)

MBAT Automotive Use Case 8 (Virtual Prototype Airbag ECU) / Scenarios 1-4:

Participants / Tools:

Analysis of Objectives

– The T&A model is checked for internal consistency with MoMuT::REQs.

– The T&A model, which was generated with UPPAAL, is verified against formal expressions entered by the verification engineer in order to validate this T&A model. Thereby also generic model properties like absence of deadlocks are analyzed.

Model Analysis

– MoMuT::REQs

– UPPAAL

MBAT Aerospace Use Case 4 (Flight Management System/UAV) / Scenarios 2 (Analysis and test for “system architecture” validation) and 3 (System testing validation):

Participants / Tools:

Create T&A model: Analysis Model: ALES FSV-BCL Toolbox, Test Model: ELVIOR TestCast Generator

Model Analysis: ALES FSV-Formal Verification

Model based Test Generator: ELVIOR TestCast Generator

Executor: ELVIOR Test Cast TTCN-3 (SC02) + AAEM Test environment (SC03)

Formalized requirements: SYSML requirement model: AAEM

Test model: SYSML statechart for POF functionality: AAEM

Test objectives: List of test criteria: ELVIOR TestCast Generator

Analysis objectives: List of derived requirements

Test suite: Selenium WebDriver

Adaptor+harness: TTCN3 functions: ELVIOR TestCast generator (SC02) + Executor to AAEM test environment adaptor: AMET (SC03)

SUT / Model generated executable: on host (C code): AAEM (SC02)

SUT / Real system executable: on OBMC board (C code): AAEM (SC03)

Report: Test results, analysis verdicts + counter examples: ALES + ELVIOR

MBAT Rail Use Case 1 (Automatic Train Control) / Scenario 2 (System Validation – Create and check T&A model):

Participants / Tools:

Create T&A model: MaTeLo Editor

Model Analysis: Model checking MaTeLo

Model based Test Generator: MaTeLo Editor & MaTeLo Testor

Executor: Mastria 200 Sol (In-house simulator)

Inspect Verdicts: ODEP + TARAM (In-house strip and analysis tools)

Comments: Model analysis is based on model checking function of MaTelo, it doesn‘t examine whether the test models meets the analysis objectives. It checks only some points, namely:

– The “Invoke” and “Terminate” state number.

– No outgoing transitions on the “Terminate” state.

– No entering transition on the “Invoke” state.

– A state is not a destination of duplicated transitions.

– For each profile chosen in the model checking, test if the sum of probabilities at the transitions leaving a state equals 1.0.

.

Relations to other Patterns

| Pattern Name | Relation* |

| MBT | This pattern complements (extends) MBT |

| Use Test Run to Slice Model | If the model is too large for analysis, that pattern can help to split test model such that parts can be analysed |

| Model and Refinement Checking | Both use same methods |

| MBA-Based Runtime Behaviour Verification | Also analyses the test model, but for generating test cases rather than for assuring model correctness |

* “this pattern” denotes the pattern described here, “that pattern” denotes the related pattern